Client

Context

RC vehicles are normally controlled by a remote controller via radio signals. Although this works well over short distances, it fails in operations that require the operator and the vehicle to be far apart. For example, an RC vehicle may need to accomplish a task in which humans should not be directly involved.

WebRemote is a service that allows the user to control a vehicle through the web. As long as both have access to the Internet, the operator and the vehicle can interact regardless of the distance that separates them.

Features

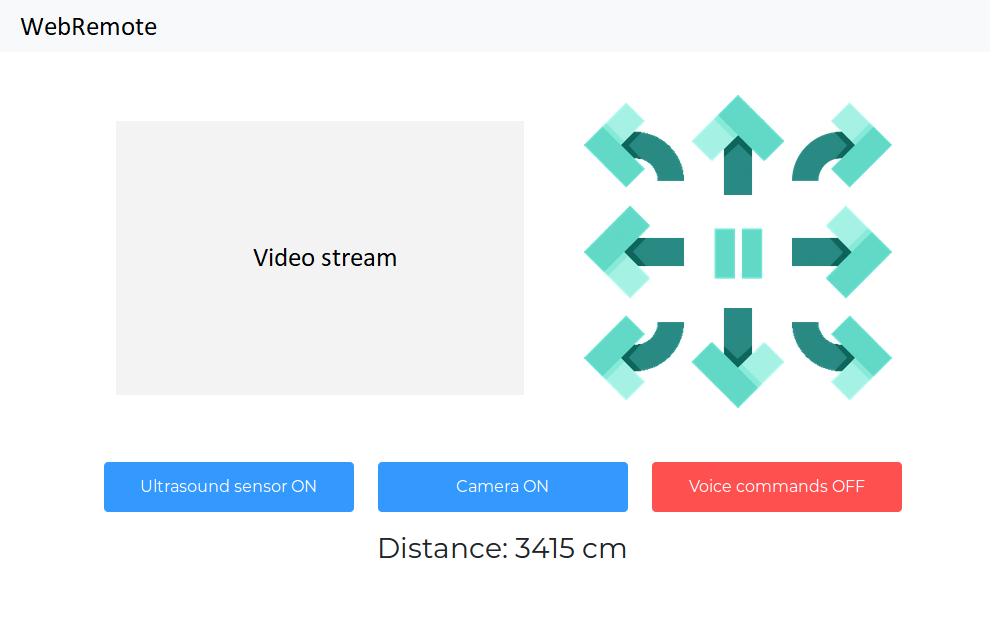

The web page used to control the vehicle allows for two methods of interaction:

- by clicking the directional buttons

- by voice

The web page also displays a video stream from the perspective of the vehicle.

Controlling the car over the web adds latency between a command and its execution. To prevent the vehicle from crashing into walls or other obstacles, it is fitted with ultrasonic sensors that allow for collision detection.
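The collision check described above can be sketched as a small guard that runs before a drive command is executed. This is only an illustration: the function name `filter_command`, the command strings and the 30 cm threshold are assumptions, not values taken from the project.

```python
# Hypothetical safety guard: names, command strings and threshold are assumptions.
STOP_DISTANCE_CM = 30.0  # assumed safety margin, to be tuned for the real vehicle


def filter_command(command: str, distance_cm: float,
                   stop_distance_cm: float = STOP_DISTANCE_CM) -> str:
    """Return the command to execute given the latest ultrasonic reading.

    A "forward" command is replaced by "stop" when the obstacle is closer
    than the safety margin; every other command passes through unchanged.
    """
    if command == "forward" and distance_cm < stop_distance_cm:
        return "stop"
    return command


if __name__ == "__main__":
    print(filter_command("forward", 120.0))  # obstacle far away: forward
    print(filter_command("forward", 12.5))   # obstacle too close: stop
```

Running such a guard on the vehicle side rather than in the browser would keep the stop decision independent of the network lag.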

Visual Concept

Web page:

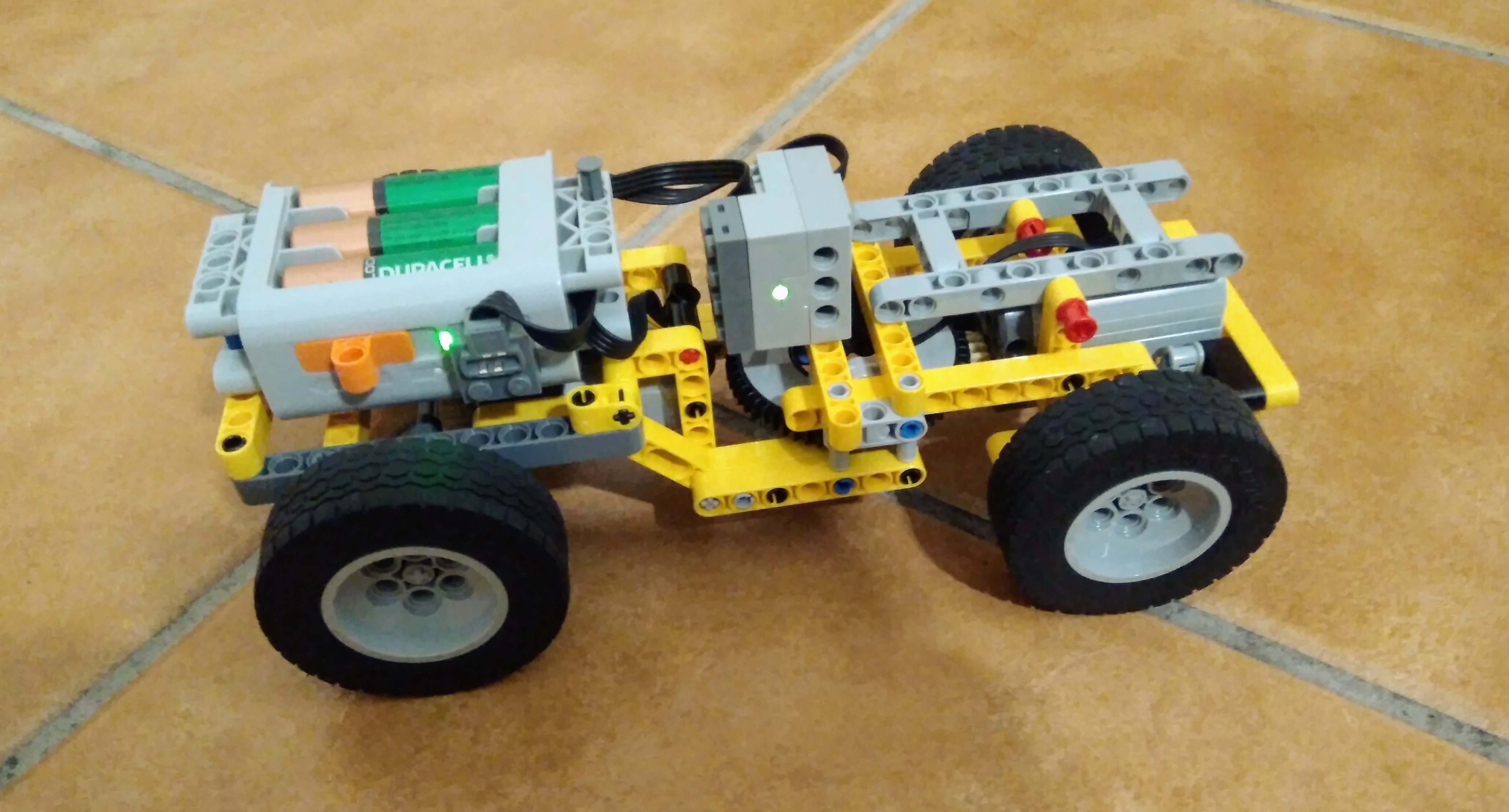

Remote control car:

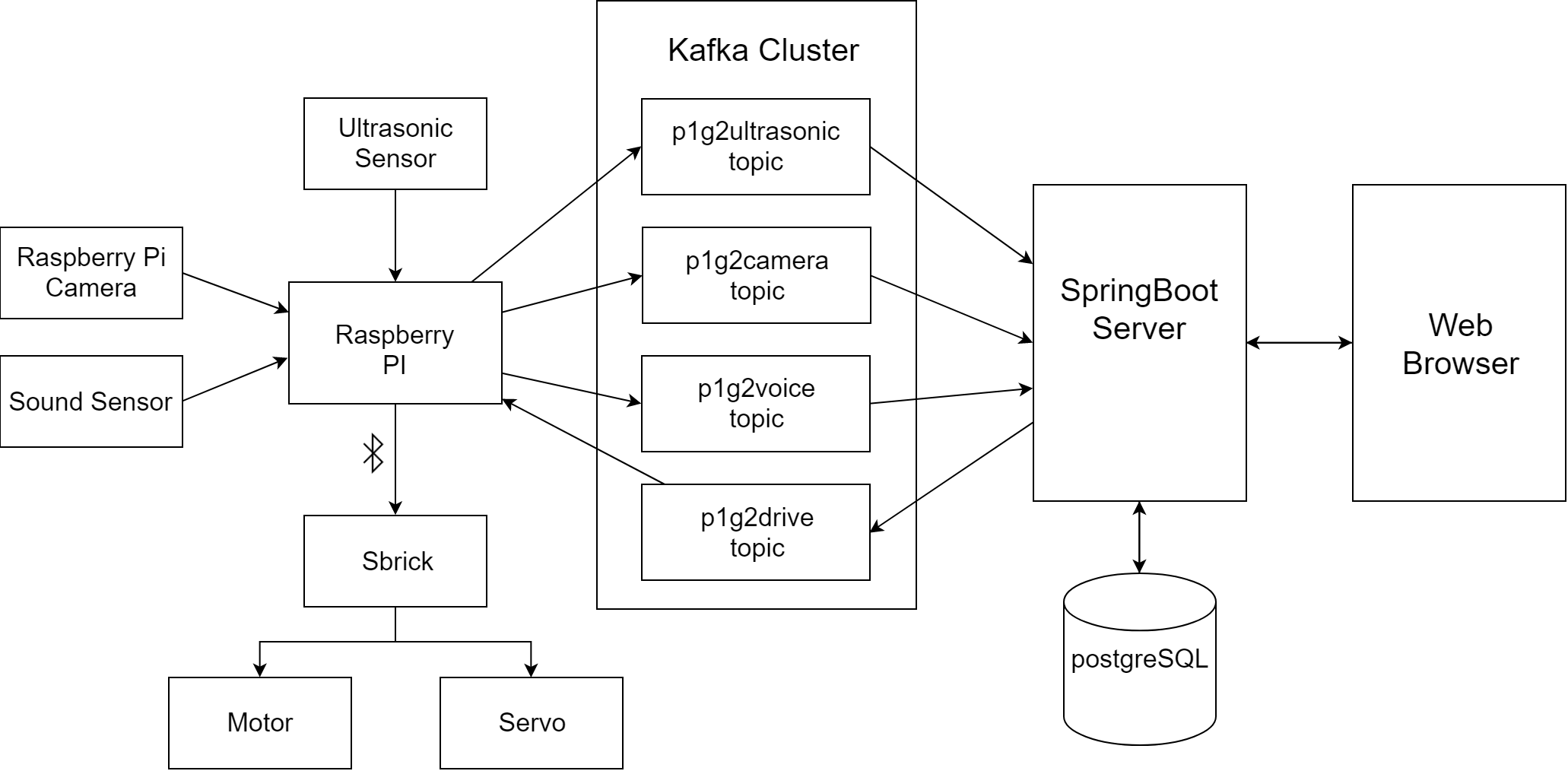

Architecture

- Ultrasonic sensor

  - A Python script on the Raspberry Pi continuously calculates the distance to the nearest obstacle and sends it to Kafka.

- Raspberry Pi Camera

  - Another Python script on the Raspberry Pi sets the resolution, captures a photo, encodes the image as bytes and sends it to Kafka.

- Sound sensor

  - A sound sensor is used to capture the voice commands given by the user.

- Sbrick

  - The Sbrick receives commands from the Raspberry Pi over Bluetooth and makes the motor and servo move or stop.

- Raspberry Pi

  - The Raspberry Pi runs several Python scripts, two of which are described above. Another script receives messages from Kafka, which can be drive or stop messages, and sends the corresponding commands to the Sbrick.

- Kafka cluster

  - The Kafka broker stores the information shared between the Raspberry Pi, the Spring Boot application and the web page. It has the following topics:

    - p1g2drive: for receiving the commands and sending them to the car

    - p1g2ultrasonic: for receiving the current distance of the car from an obstacle and sending it to the web browser

    - p1g2camera: for receiving the camera frames and sending them to the web browser

- Spring Boot Server

  - The Spring Boot web application coordinates the flow of information and processes the ultrasonic sensor data, the camera frames and the commands in real time. It also provides a REST API that the web page uses to query the camera frames and the ultrasonic sensor data. It connects to the PostgreSQL database to store the history of the commands executed by the user, each with its timestamp.

- Web Browser

  - The web page receives the camera frames and the ultrasonic sensor data in real time and shows them to the user. It also has a set of buttons to control the car.

- PostgreSQL database

  - We use a PostgreSQL database to store the commands executed by the user. Each record includes the timestamp at which the command was executed. This type of database was chosen because it makes it easy to retrieve the records within a given time interval.
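As an illustration of the ultrasonic sensor step in the list above, the sketch below converts an HC-SR04 echo pulse duration into a distance and wraps it in a JSON payload intended for the p1g2ultrasonic topic. The payload fields (`distance_cm`, `timestamp`) and the function names are assumptions, and both the GPIO reading and the Kafka producer call are left out because the source does not show them.

```python
import json
import time
from typing import Optional

SPEED_OF_SOUND_CM_PER_S = 34300.0  # approximate speed of sound in air at 20 °C


def echo_to_distance_cm(pulse_seconds: float) -> float:
    """Convert the HC-SR04 echo pulse width to a one-way distance in cm.

    The pulse covers the trip to the obstacle and back, hence the division by 2.
    """
    if pulse_seconds < 0:
        raise ValueError("pulse duration cannot be negative")
    return pulse_seconds * SPEED_OF_SOUND_CM_PER_S / 2.0


def build_ultrasonic_message(distance_cm: float,
                             timestamp: Optional[float] = None) -> bytes:
    """Serialize a reading as UTF-8 JSON (hypothetical payload layout)."""
    payload = {
        "distance_cm": round(distance_cm, 1),
        "timestamp": time.time() if timestamp is None else timestamp,
    }
    return json.dumps(payload).encode("utf-8")
```

With these assumptions, a 1 ms echo pulse corresponds to roughly 17 cm, and the resulting bytes could be handed to whichever Kafka producer the script uses.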

Technical Requirements

In terms of data, the application will handle live streaming of frame data from the camera, ultrasound data and the JSON commands sent by the web page.

- Raspberry Pi 3 Model B V1.2

- Raspberry Pi Camera V2.1

- 2 x HC-SR04 (Ultrasound sensor)

- Waveshare Sound sensor V2 (microphone)

- 1 or 2 breadboard(s)

- Jumpers

- Lego car:

  - Sbrick (controller)

  - LEGO Extension Wire

  - LEGO Power Functions Servo Motor

  - LEGO Power Functions L-Motor

  - LEGO® Power Functions Battery Box

- Batteries

Vision

Scenarios

a) User controls vehicle using directional buttons

- User A turns on the RC vehicle

- User B connects to the website

- The website shows User B the directional arrows, the video stream and the voice activation button

- User B presses the forward button

- The vehicle goes forward and the video of the corresponding action is displayed to the user

b) User controls vehicle by voice

- User A turns on the RC vehicle

- User B connects to the website

- The website shows User B the directional arrows, the video stream and the voice activation button

- User B presses the button to activate speech recognition

- User B says "forward"

- The vehicle goes forward and the video of the corresponding action is displayed to the user
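The voice scenario needs a step that turns the recognized text into a drive command. A minimal sketch of that mapping follows; the vocabulary, the command names and the `transcript_to_command` helper are hypothetical, since the source does not list the supported voice commands apart from "forward".

```python
from typing import Optional

# Hypothetical vocabulary: only "forward" appears in the scenario above.
VOICE_COMMANDS = {
    "forward": "forward",
    "back": "backward",
    "backward": "backward",
    "left": "left",
    "right": "right",
    "stop": "stop",
}


def transcript_to_command(transcript: str) -> Optional[str]:
    """Return the command for the first known word in the transcript, or None."""
    for word in transcript.lower().split():
        # Strip trailing punctuation that a speech recognizer may add.
        command = VOICE_COMMANDS.get(word.strip(".,!?"))
        if command is not None:
            return command
    return None
```

Returning `None` for unrecognized speech lets the caller ignore it instead of sending an unintended command to the car.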

c) User controls vehicle to crash into a wall

- User A turns on the RC vehicle

- User B connects to the website

- The website shows User B the directional arrows, the video stream and the voice activation button

- User B presses the forward button

- The vehicle goes forward and stops due to an obstacle

Persona

My name is Daniel and I'm 26 years old. I'm a software developer and also a volunteer firefighter in the monitoring department. I'm very passionate about technology, but I also enjoy going to the countryside to recharge my batteries.

One of my goals in life is to prevent the deforestation caused by fires and to reduce the destruction of natural habitats.

Developer

Pipeline Architecture

Original source: https://www.edureka.co/blog/devops-tools

The deployment pipeline used for this project is based on continuous integration with Jenkins. To monitor the application, the ELK stack gathers all the logs and stores them in a NoSQL database.

Team

Diogo Guedes

Developer

Carlos Ribeiro

Developer

Jorge Faustino

Developer

Gonçalo Ferreira

Developer